Kia Karavas

THE KPG SPEAKING TEST: DEFINING CHARACTERISTICS

It is generally acknowledged that the assessment of speaking is one of the most challenging and complex areas of assessment. This is due to various reasons, including: a) the difficulty of developing an operational construct definition of oral performance that can capture the richness of oral communication and human interaction; b) the multiplicity of communication skills required for oral interaction, skills that do not lend themselves easily to objective assessment; c) the range of factors that influence our impression of how well someone can speak a language (cf. Fulcher 2003, Kitao and Kitao 1996, Luoma 2004).

Of course, despite the difficulties involved in its assessment, speaking is one of the most common human activities, and developing the ability to perform orally is an important part of foreign language programmes; it is therefore also important in language testing. The KPG exam battery gives the assessment of speaking equal weight to that of all the other areas of communication: reading and listening comprehension, as well as writing performance.

Speaking is assessed in Module 4 (oral production and mediation) of the KPG exams, at all levels. The development of the speaking test is based on clearly laid out test specifications (which all languages certified through the KPG examination system follow).[1] For the design of test items and tasks, the KPG candidates’ age, first language, language learning background and other sociocultural factors are taken into account. The test items and tasks undergo rigorous and systematic pre- and pilot testing and evaluation (by oral examiners, KPG candidates and trained judges), on the basis of which changes, improvements and fine-tuning of the test items are made. Moreover, research is carried out by the RCeL,[2] focusing on the construct validity and effectiveness of the test as a whole.

The KPG exams adhere to a functional approach to language use and set out, throughout all modules, to evaluate socially purposeful language use, which entails a certain amount of social and school literacy. Within the KPG exam battery, language is viewed as social practice embedded in the sociocultural context by which it is produced. This view of language is reflected in the design of the speaking tasks and in the assessment criteria.

The task rubrics always make the context of situation explicit, since candidates are expected to produce socially meaningful language given the social context. The sample task below (from the B2 level exam of May 2007) illustrates this.

|

|

Speaking Task

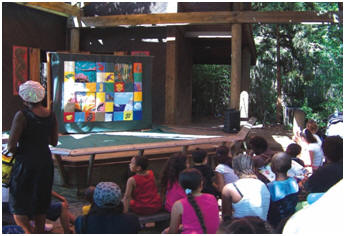

Look at this photo.

Imagine you have taken

your 4 year-old niece

for a walk in the centre

of town and you come

across this event.

Explain what’s

happening, and why the

event is taking place.

|

As one can see, the rubrics for the task above define (a) the purpose of communication (to explain what’s happening), (b) the content of communication (to describe the event), (c) who the participants in the communicative event are and what their relationship is (adult-child, uncle/aunt-niece), (d) what the setting of the communicative event is (in the city centre), and (e) what the channel of communication is (face-to-face interaction). Given the expectations in performance, one of the assessment criteria for all levels is “sociolinguistic competence,” which simply means that the candidate is expected to make lexical and grammatical choices which are appropriate for the situation as determined by the task.[3]

The KPG speaking test

The table below presents the content and structure of the speaking test for the exams now administered by the KPG. As the reader can see, the tests for the different levels share similarities. However, they differ decisively in terms of task difficulty, linguistic complexity of the expected output and linguistic complexity of the source texts.

| A1+A2 LEVEL SPEAKING TEST | |
| --- | --- |
| Target population | Young candidates aged 10-15 years |
| Duration of the test | 20 minutes |
| Pattern of participation | Candidates are tested in pairs but do not converse with each other. |
| Test content | Dialogue (5 minutes for both candidates): This is a “getting to know you” task, which requires interaction between Examiner and candidate. Each candidate is asked four (4) questions, two for A1 and two for A2 level, which are signposted for the examiner. |
| | Talking about photos (5 minutes for both candidates): This activity essentially involves a guided description of a photo or series of photos (or other visuals, e.g. sketches, drawings) which are thematically linked. The activity comprises four (4) questions, two for A1 and two for A2 level. |
| | Giving and asking for information (5 minutes for both candidates): This activity is based on multimodal texts and also consists of two parts and five (5) questions in total, two for A1 and three for A2 level. |

| B1+B2 LEVEL SPEAKING TEST | |
| --- | --- |
| Target population | Candidates aged 15+ |
| Duration of the test | 15-20 minutes |
| Pattern of participation | Candidates are tested in pairs but do not converse with each other. |
| Test content | Dialogue (3-4 minutes for both candidates) between the examiner and each candidate, who answers questions about him/herself and his/her environment posed by the examiner. |
| | One-sided talk (5-6 minutes for both candidates) by each candidate, who develops a topic on the basis of a visual prompt. |
| | Mediation (6 minutes for both candidates) by each candidate, who develops a topic based on input from a Greek text. |

| C1 LEVEL SPEAKING TEST | |
| --- | --- |
| Target population | Candidates aged 15+ |
| Duration of the test | 20 minutes |
| Pattern of participation | Candidates are tested in pairs but do not converse with each other. |
| Test content | Warm-up (not assessed, 1 minute per candidate): Examiner asks each candidate a few ice-breaking questions (age, studies/work, hobbies). |
| | Open-ended response (4 minutes for both candidates): The candidate responds to a single question posed by the examiner, expressing and justifying his/her opinion about a particular issue/topic. |
| | Mediation and open-ended conversation (15 minutes for both candidates): Candidates carry out a conversation in order to complete a task using input from a Greek text. |

As can be seen above, the speaking test for the A1+A2 level integrated test,[4] as well as for the B1 and the B2 level tests, consists of three activities, the first of which involves questions relating to candidates’ immediate environment, work, hobbies, interests etc. The C1 level speaking test, by contrast, consists of two activities, the first of which requires candidates to respond to an opinion question. The A1+A2 level speaking test does not include mediation activities, which are included in the B1, B2 and C1 level tests. That is, candidates at these levels are required to perform orally in English, relaying information they have selected from a source text in Greek, so as to respond to a given communicative purpose.

The speaking test procedure

The KPG speaking test involves two examiners and two candidates in the examination room. One of the two examiners is the ‘Interlocutor’, i.e., the one who conducts the exam (in other words, asks the questions, assigns the tasks and participates in the speech event). The other is the ‘Rater’, i.e., the one who sits silently to one side and evaluates and marks the candidates’ performance. The Interlocutor also marks their performance, once candidates have left the room. Examiners alternate in their role as Interlocutor and Rater every three or four testing sessions.

Examiners are trained for their roles as Interlocutor and Rater through seminars which take place systematically throughout the year. In addition, on the day of the oral test, examiners are given an examiner pack which contains guidelines regarding the exam procedure and oral examiner conduct, and the oral test material: questions, tasks and rating criteria. These are handed to examiners at least two hours before the exam begins, along with the Candidate Booklet, which contains the prompts for the exam (photos and/or Greek texts, since one of the activities involves mediation).

Assessment criteria for oral production

The assessment criteria for oral production reflect the functional approach to language use that the KPG exams adhere to. The structure of the assessment scales for the different level speaking tests is similar, while the nature of the criteria depends on the expectations for oral production, illustrative descriptors and can-do statements for each level and on the requirements of the speaking activities. Thus for the A1+A2, the B1 and the B2 level speaking tests, the assessment criteria are grouped under two main categories: criteria for assessing a) task completion and b) language performance. As a result, the Speaking Test Rating Scale, which provides a breakdown and description of each criterion on the oral assessment scale, is presented in two parts. Part 1 presents the Task completion criteria, which have to do with the degree to which the candidates achieved the communicative purpose of the task, while Part 2 presents the Language performance criteria, which focus on the quality of language output in terms of pronunciation, vocabulary, grammar and syntax, and coherence (for A1+A2), and in terms of the candidates’ phonological competence, linguistic competence, sociolinguistic competence and pragmatic competence (for the B1 and B2 level speaking tests).

Given the requirements of the C1 speaking test, the assessment criteria are grouped under two categories: a) overall performance for tasks 1 & 2, and b) assessment of task 2: the mediation activity. As a result, the Speaking Test Rating Scale, which provides a breakdown and description of each criterion on the oral assessment scale, is presented in two parts. Part 1 presents the Overall performance for tasks 1 & 2 criteria, which focus on the quality of language output in terms of the candidates’ phonological competence, linguistic competence, appropriateness of language choices, and cohesion, coherence of speech and fluency; Part 2 presents the Assessment of task 2 criteria, which focus on the interaction and mediation skills of the candidates.

Developments and research relating to the speaking test

Assessment of oral proficiency is a complex and largely subjective process in which many variables affect the quality and quantity of language output as well as the rating of performance, and ultimately threaten the validity, reliability and fairness of the oral test procedure. Given that one of the most significant variables potentially affecting candidate output and examiner rating is the linguistic conduct of the examiner (Bachman et al. 1995; Bonk & Ockey 2003; Lazaraton 1996; McNamara 1996; O’Sullivan 2000)[5], the KPG exam system has established a systematic, intensive and ongoing programme of oral examiner training.[6] The goal of the training programme is to develop a database of approved oral examiners, that is, examiners who have taken part in all training seminars and whose performance has been positively evaluated by specially trained observers (see Delieza, this volume).

Another aspect of the speaking test being investigated systematically is the reliability of examiner marking. This is monitored and assessed through systematic inter-rater reliability checks during and after each exam administration. For the speaking test, inter-rater reliability is assessed through the data collected by observers, who note the mark awarded to each candidate by each observed examiner. On the observation form, the observers also note their own mark for each candidate’s performance. This data is then collected by the RCeL, where inter-rater reliability estimates are calculated and results are interpreted.
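The text does not state which statistic the RCeL uses for these estimates. As a minimal sketch only, assuming whole-number marks and two widely used agreement measures (the exact-agreement rate and Cohen's kappa), a comparison between an examiner's marks and an observer's marks for the same candidates might look like the following. The function names and the sample marks are hypothetical and are not KPG data.

```python
from collections import Counter


def exact_agreement(marks_a, marks_b):
    """Proportion of candidates who received the same mark from both raters."""
    if len(marks_a) != len(marks_b) or not marks_a:
        raise ValueError("both raters must mark the same non-empty set of candidates")
    return sum(a == b for a, b in zip(marks_a, marks_b)) / len(marks_a)


def cohen_kappa(marks_a, marks_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(marks_a)
    p_observed = exact_agreement(marks_a, marks_b)
    freq_a = Counter(marks_a)
    freq_b = Counter(marks_b)
    # Chance agreement: probability that both raters give the same mark
    # if each assigned marks independently at their own observed rates.
    p_chance = sum(freq_a[m] * freq_b[m] for m in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used one identical mark throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical marks for six candidates (illustration only).
examiner_marks = [3, 4, 2, 5, 3, 4]
observer_marks = [3, 4, 3, 5, 3, 2]

print(round(exact_agreement(examiner_marks, observer_marks), 3))  # 0.667
print(round(cohen_kappa(examiner_marks, observer_marks), 3))      # 0.538
```

Plain kappa treats a one-point and a three-point disagreement alike, so for ordinal marks a weighted kappa or a correlation coefficient may be the more suitable choice.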

Finally, after each exam administration, oral examiners are requested to complete an oral test feedback form which aims to elicit feedback concerning the potential problems with test items, their usefulness, appropriateness and practicality. These results are sent to the project team directors, who share and discuss them with their test development teams. The project team undertakes a systematic post-examination review, weighs the results of the exams, and takes into consideration the responses on examiner feedback forms before deciding on potential revisions/changes deemed necessary for the improvement of the test papers.

References

Bachman, L. F., Lynch, B. K., & Mason, M. (1995). Investigating variability in tasks and rater judgements in a performance test of foreign language speaking. Language Testing, 12, 239-258.

Bonk, W. J., & Ockey, G. (2003). A many-facet Rasch analysis of the second language group oral discussion task. Language Testing, 20(1), 89-110.

Fulcher, G. (2003). Testing Second Language Speaking. Harlow: Pearson Education Limited.

Karavas, K. (Ed.) (2008). The KPG Speaking Test in English: A Handbook. National and Kapodistrian University of Athens, Faculty of English Studies: RCeL Publication Series 2 (RCeL publication series editors: Bessie Dendrinos & Kia Karavas).

Kitao, S. K., & Kitao, K. (1996). Testing Speaking. ERIC Clearinghouse ED39821.

Lazaraton, A. (1996). A Qualitative Approach to Monitoring Examiner Conduct in CASE. In M. Milanovic and N. Saville (Eds.) Performance Testing, Cognition and Assessment, Studies in Language Testing 3 (18-33). Selected Papers from the 15th Language Testing Research Colloquium, Cambridge and Arnhem. Cambridge: UCLES/Cambridge University Press.

Luoma, S. (2004). Assessing Speaking. Cambridge: Cambridge University Press.

McNamara, T. F. (1996). Measuring Second Language Performance. London: Longman.

O’Sullivan, B. (2000). Exploring gender and oral proficiency interview performance. System, 28, 373-386.